On-The-Move Language Translation Via A Phone App And A Custom Designed Headset - AI Hardware (C++, Arduino, Python, Flask)

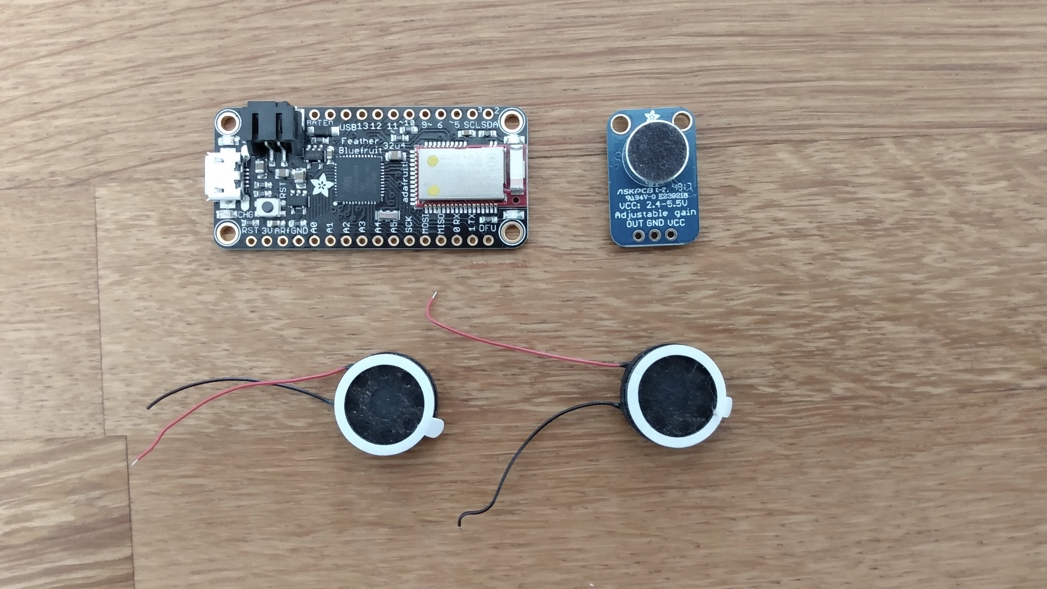

Arduino is an excellent microcontroller platform for building product prototypes. I had experimented with it during school and was familiar with how it worked. For this project, I purchased an Adafruit Feather 32U4 Bluefruit LE microcontroller, which is programmed much like a standard Arduino board. Along with it, I used a breadboard, two speakers, and one microphone.

All the hardware components, small enough to fit in a wearable device.

Software

For this project, I had to develop three pieces of software. First, I needed to write the C++ script that would run on the Adafruit microcontroller. Second, I had to create an Android app that would run on my phone. Third, I had to build a Python Flask server that could receive audio, translate it, and send it back. I wanted the system to be capable of translating a foreign language to a native language and vice versa.

C++ Script

This script had to read the analog input from the microphone, convert it into pulse-code modulation (PCM) data, and send that data to the phone app. An added complication was the limited memory of the Adafruit microcontroller, which meant the audio data had to be written to an SD card before being transmitted to the phone app.

In the initial versions of the system, I created the PCM file by sampling the analog audio stream at uniform intervals and sending a collection of these samples to the phone app. The server would then add headers to the PCM file so that it could be read as a waveform audio file (WAV), a format supported by the language translation API used on the server. In the end, this approach worked: the server was able to convert the PCM file created from raw analog audio into a WAV file that could be played back and translated.

The challenge was sending the collection of samples to the phone app, as the Adafruit microcontroller did not have enough RAM to hold a large buffer in a variable. It is worth noting that the phone app could receive the raw byte stream from the microphone in real time via Bluetooth, so arguably there was no need to buffer it on the microcontroller at all; it could have been stored in a variable in the phone app instead. However, MIT App Inventor, the software I used to create the app, is a visual editor with limited programming functionality. I did not have time to build a native Android app, so I searched for other ways to send the PCM file to the phone app.
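The server-side step of adding WAV headers to raw PCM samples can be sketched with Python's standard `wave` module. This is a minimal illustration, not the code used in the project: the function name `pcm_to_wav` is hypothetical, and the 8 kHz, mono, 8-bit unsigned format is an assumption, since the actual sample rate and bit depth are not stated here.

```python
import io
import wave


def pcm_to_wav(pcm: bytes, sample_rate: int = 8000,
               channels: int = 1, sample_width: int = 1) -> bytes:
    """Wrap raw PCM bytes in a WAV container and return the result.

    Assumed defaults (not taken from the project): 8000 Hz, mono,
    1 byte per sample. One-byte WAV samples are unsigned, so 128
    represents silence.
    """
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(channels)
        wav_file.setsampwidth(sample_width)
        wav_file.setframerate(sample_rate)
        # writeframes() also fills in the size fields of the header.
        wav_file.writeframes(pcm)
    return buffer.getvalue()


# One second of silence at the assumed format.
silence = bytes([128]) * 8000
wav_bytes = pcm_to_wav(silence)
```

The parameters passed to the header must match how the microcontroller actually sampled the audio; if the sample rate is wrong, the file still plays but at the wrong speed and pitch.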

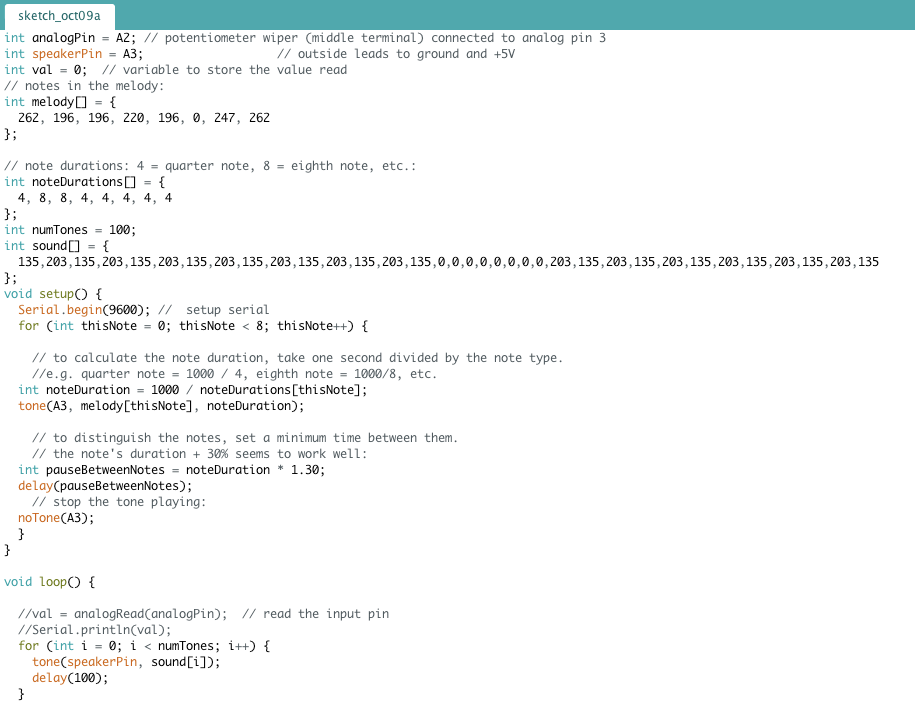

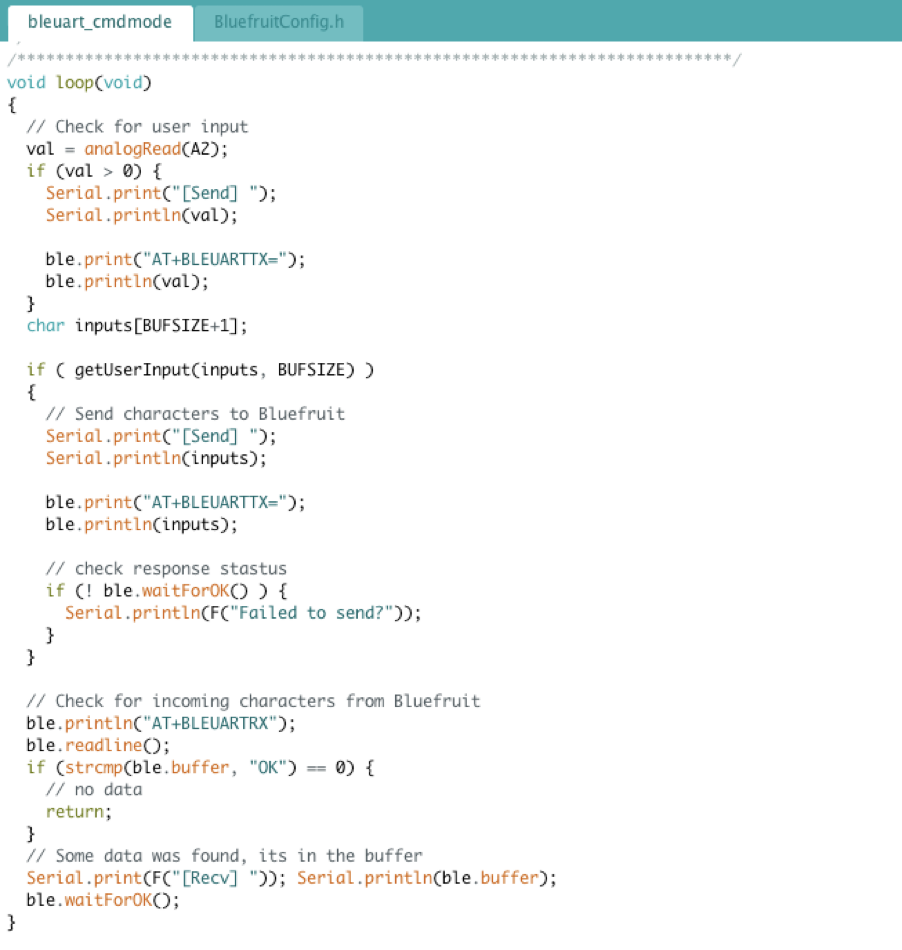

Testing the speakers.

Working with Adafruit’s Bluefruit library.

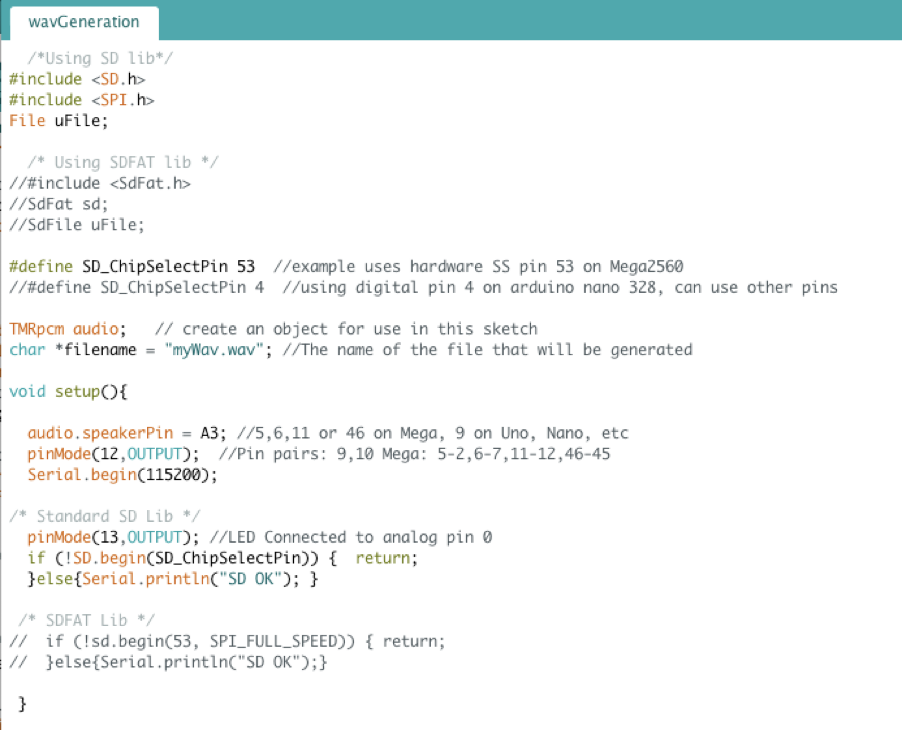

Eventually, I found the open-source TMRpcm GitHub repository by TMRh20, which provided a solution to this problem. Although the repository primarily focused on playing back PCM/WAV files from an SD card, it also included code for saving analog input from a microphone to the SD card as a PCM file.

Experimenting with the TMRpcm library.

If I could use this framework and find a way to transfer the WAV files from the SD card to the phone app via Bluetooth, the C++ portion of my project would be complete.

Android Phone App

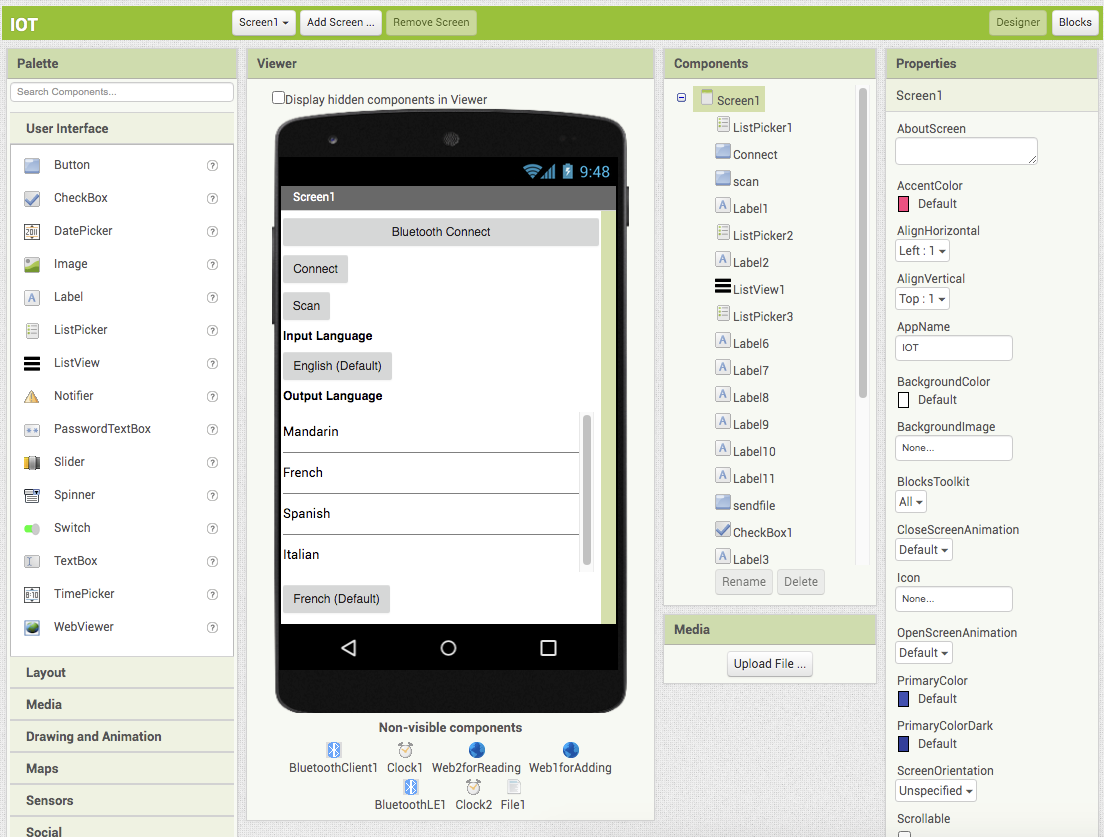

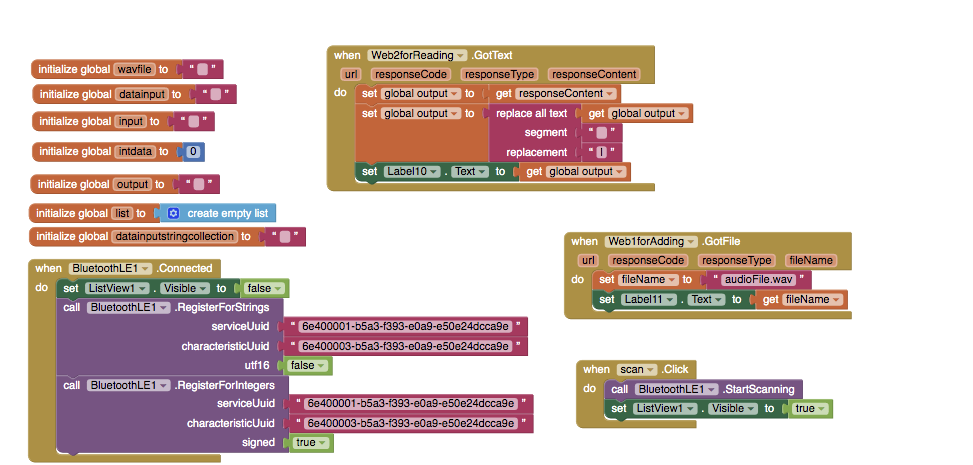

As mentioned earlier, I opted for MIT App Inventor to build this app because I wanted to develop and test my prototype quickly. I had previously used MIT App Inventor to create an Android app while in school and appreciated its ease of use.

The UI of the App.

The block code of the App.

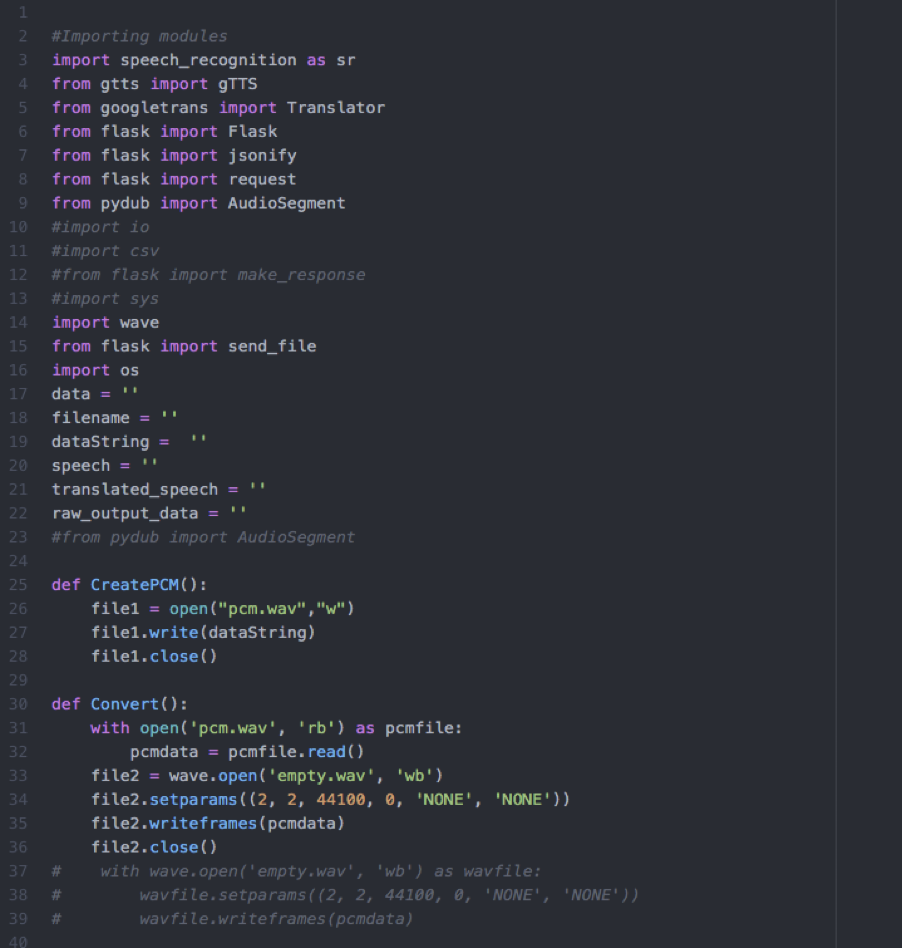

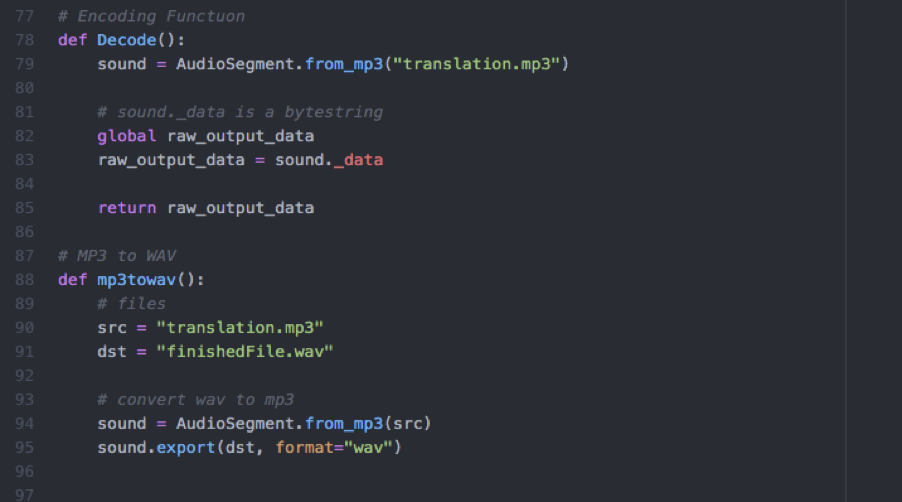

Flask Web Server

For my Flask server, I chose to host it on a service called PythonAnywhere because it is free to use. I employed the ‘speechrecognition’ library to transcribe the spoken audio into text. Then, I used the ‘googletrans’ library to translate the text into the desired language. Next, I used the ‘gTTS’ (Google Text to Speech) library to convert the translated text into speech, saved as an MP3 file. Finally, I converted the MP3 file into a WAV file, which I returned to the Android phone app.
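The order of the server-side stages can be sketched as follows. This is an illustrative outline under stated assumptions, not the project's actual server code: all function names are hypothetical, the language codes are assumed to be two-letter codes such as "es" and "en", and the `googletrans` call assumes a release with a synchronous `translate()` method (some newer releases expose an async API instead). The final MP3-to-WAV conversion is left out because the tool used for it is not named here. Third-party imports are placed inside the functions so that each stage can be swapped out or tested without network access.

```python
def speech_to_text(wav_path: str, language: str) -> str:
    """Transcribe a WAV file using the SpeechRecognition package."""
    import speech_recognition as sr  # third-party, imported lazily

    recognizer = sr.Recognizer()
    with sr.AudioFile(wav_path) as source:
        audio = recognizer.record(source)
    return recognizer.recognize_google(audio, language=language)


def translate_text(text: str, src: str, dest: str) -> str:
    """Translate text using googletrans (assumes a synchronous release)."""
    from googletrans import Translator  # third-party, imported lazily

    return Translator().translate(text, src=src, dest=dest).text


def text_to_mp3(text: str, language: str, mp3_path: str) -> None:
    """Synthesize speech with gTTS and save it as an MP3 file."""
    from gtts import gTTS  # third-party, imported lazily

    gTTS(text=text, lang=language).save(mp3_path)


def translate_speech(wav_path: str, src: str, dest: str, mp3_path: str,
                     stt=speech_to_text, mt=translate_text,
                     tts=text_to_mp3) -> str:
    """Run the three stages in order and return the translated text.

    The stage functions are parameters so that any of them can be
    replaced, for example with a stub during testing.
    """
    text = stt(wav_path, src)
    translated = mt(text, src, dest)
    tts(translated, dest, mp3_path)
    return translated
```

Inside a Flask route, a function like `translate_speech` would be called on the uploaded audio, and the resulting file would be converted to WAV and returned in the response.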

That was my experience attempting to build an in-ear language translation device. I certainly learned that writing software for audio is complex and often under-documented in public libraries. Nevertheless, if I had managed to get the Adafruit microcontroller to work as intended, I believe the device would have functioned. Whether it would have been able to consistently translate fast-moving conversations at a distance is another question entirely.