Percy - Automatic Receipt & Invoice Data Extraction

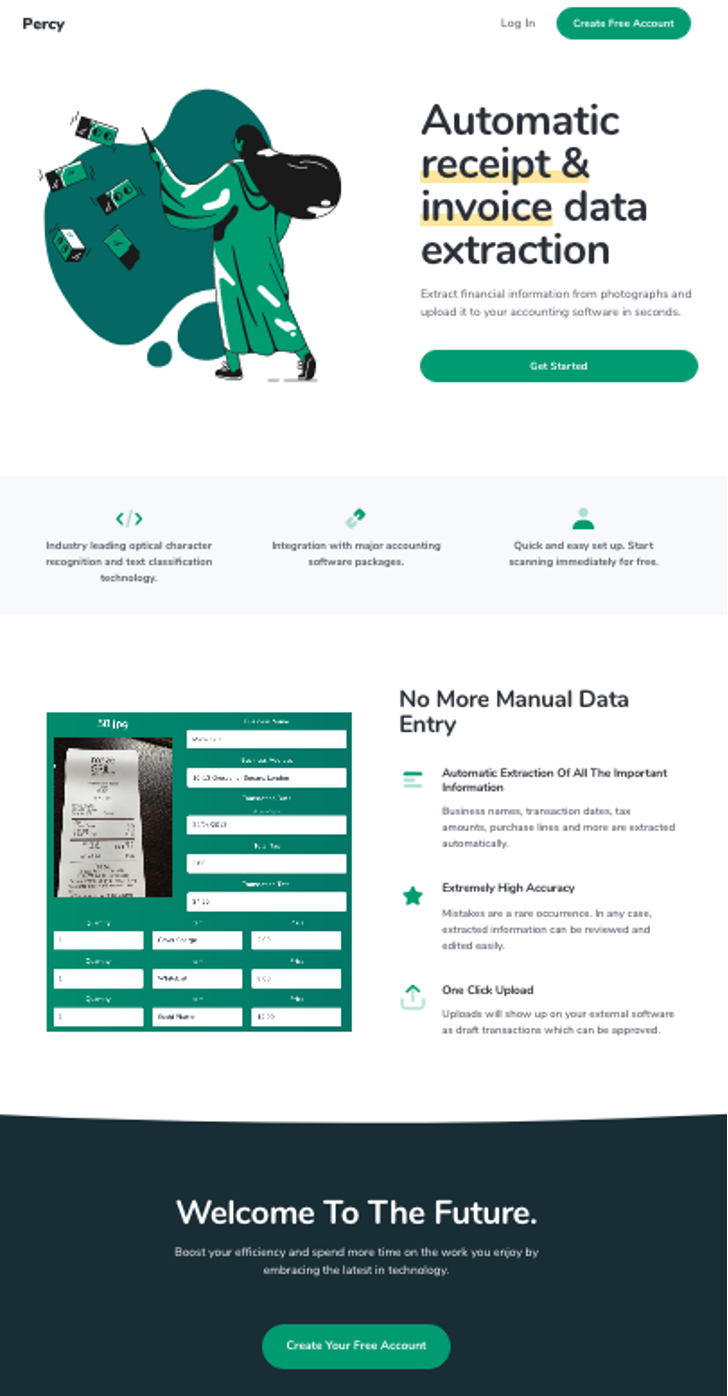

From March to July 2020, I built a web application called Percy. It lets accountants extract financial information from receipts and invoices automatically, simply by uploading a photograph of the document. The core technology combines a machine learning optical character recognition (OCR) engine with a word categorization system that finds the financial information in the extracted text. This is a challenging task because even the most advanced publicly available OCR engines struggle to interpret the mix of numbers and letters found on receipts. To solve this problem, I had to build a smart word categorization system that could handle the quirks of the OCR engine’s output.

Overall, I am proud of the product: it accurately extracts financial information from images that are well lit, taken on a flat surface, and sharp enough to be human-readable. If I were to continue working on Percy, I would focus on improving the machine learning model that the OCR engine is built on, because more accurate OCR output would significantly improve the quality of the financial information extraction.

Truthfully, my work on Percy was less an exercise in building a financial extraction system than a lesson in building a fully functional, strongly branded web application from start to finish. For this reason, this blog post focuses on the full-stack development process I used to create the application. If you wish to test the application yourself before reading on, head over to percy.app, create an account, and upload a few receipts/invoices. While building the app, I worked hard to make it secure so that all users’ personal and financial information is safe.
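As noted above, OCR engines often misread the mix of letters and digits on receipts. The post does not say how Percy’s word categorization system copes with this, so the following is only a hypothetical sketch of one common approach: repair letter/digit confusions (such as `O` read for `0`, or `l` for `1`) only in tokens that already contain a real digit, then accept the result as an amount if it matches a price pattern. The confusion table, the regular expression, and the name `normalize_amount_token` are all assumptions, not Percy’s actual code.

```python
import re
from typing import Optional

# Hypothetical table of letters that OCR engines often confuse with digits.
OCR_DIGIT_CONFUSIONS = str.maketrans(
    {"O": "0", "o": "0", "D": "0", "l": "1", "I": "1", "S": "5", "B": "8"}
)

# Accepts amounts such as "10.50", "1204.99" or "1,204.99" (assumed format).
AMOUNT_PATTERN = re.compile(r"\d{1,3}(?:,\d{3})*\.\d{2}|\d+\.\d{2}")


def normalize_amount_token(token: str) -> Optional[str]:
    """Return a cleaned amount string, or None if the token is not amount-like."""
    stripped = token.strip().lstrip("£$€")
    # Only attempt repairs on tokens that already contain a real digit,
    # so ordinary words like "TOTAL" or "BOSS" are never turned into numbers.
    if not any(ch.isdigit() for ch in stripped):
        return None
    candidate = stripped.translate(OCR_DIGIT_CONFUSIONS)
    if AMOUNT_PATTERN.fullmatch(candidate):
        return candidate.replace(",", "")
    return None


print(normalize_amount_token("lO.5O"))      # 10.50
print(normalize_amount_token("£1,2O4.99"))  # 1204.99
print(normalize_amount_token("TOTAL"))      # None
```

Requiring at least one genuine digit before substituting is a deliberate trade-off: it misses tokens the OCR engine garbled completely, but it stops ordinary words from being misread as prices.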

Origins of Percy: March 2020

The original mission of this application was to use the latest developments in machine learning to completely automate the manual tasks that accountants carry out on a daily basis. During the summer of 2018, I spent three months as an intern in the finance team of a start-up called Velocity Black. They had real problems managing the high number of transactions they handled daily, so they relied heavily on interns and accounting assistants to process receipts and invoices and reconcile bank accounts. The problem is that this is an incredibly tedious and difficult task that is poorly suited to humans. Not surprisingly, those who joined the accounting team in these roles did not stay for long. The following year, during my internship with Whave in Uganda, I saw something similar: an employee had to travel to the head office one day a week to enter receipts and invoices into the accounting software. Seeing the business need for automation in this area, I set out to build software that automated these tasks.

I really wanted my app to have a strong, memorable brand. As my software was originally going to be an artificially intelligent accounting assistant, I thought giving it a human name would work well. Short, memorable domain names are incredibly hard to find nowadays, so I spent a while searching for one that worked. I’m happy with the name Percy: it sounds somewhat related to accounting and is definitely memorable. Conveniently, it is also generic enough that I could reuse the domain name/brand for a different project in the future if I wish.

Version One

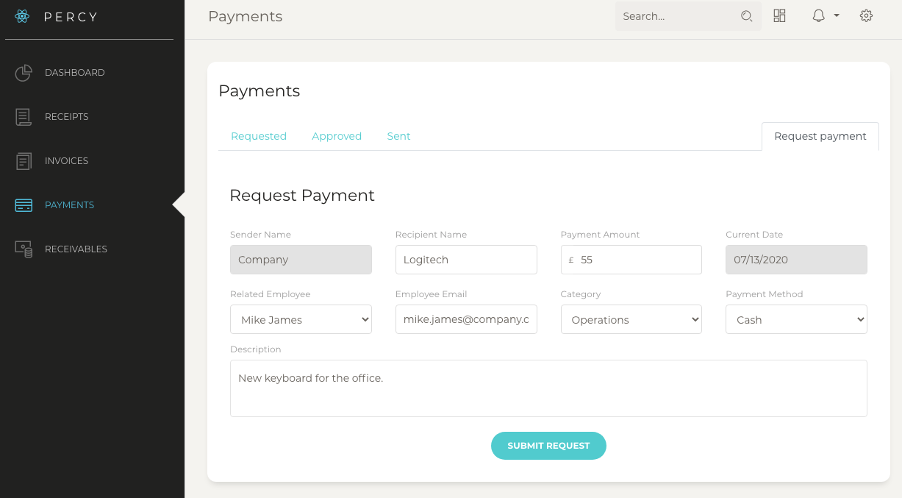

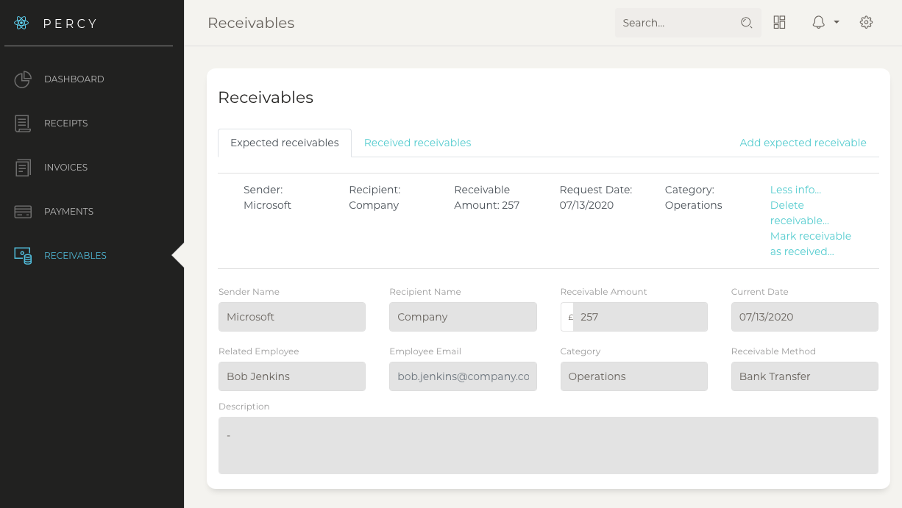

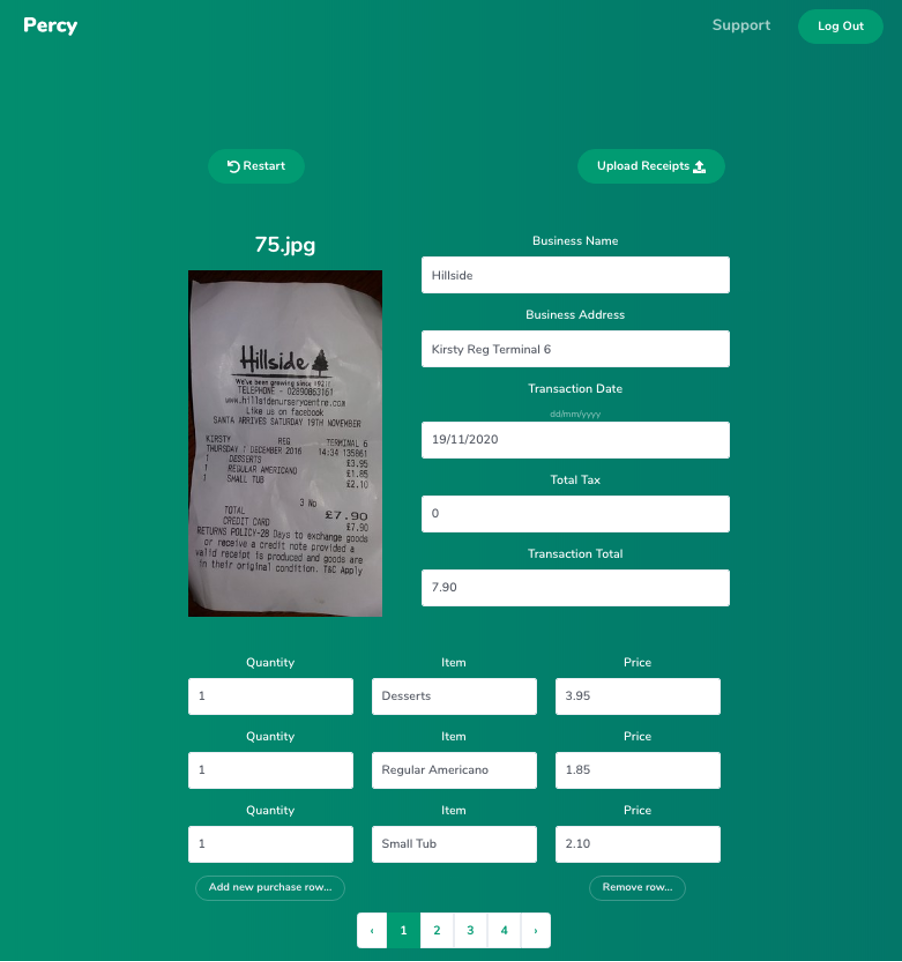

The first version of Percy was a React/Node/Express app containing just the core system. It allowed you not only to extract information from receipts & invoices but also to monitor trade receivables and trade payables. As it did not yet have a database, the images and extracted text were stored on the server. By far the hardest part of building the React component was dynamically displaying the photos of the uploaded receipts alongside their extracted financial information from the component’s state. Two features made this considerably harder: adding and removing rows, and displaying errors when the user entered the wrong type of information into a field. Both had complicated implications for state management, and the result was a maze of ‘undefined’ errors that I had to work my way through. When I completed the first version, bugs were still present in the system. However, in later versions I managed to make this intricate system work perfectly, and this is something I am proud of.
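The post does not show how the row state was structured, so the following is only a hypothetical sketch of one pattern that avoids many ‘undefined’ errors when rows can be added, removed, and validated: keep the rows in an immutable sequence, give each row a stable id instead of relying on its position, and recompute a row’s validation error whenever its value changes. In React this logic would typically live in a `useReducer` reducer; it is written in Python here purely for illustration, and the `Row` fields and function names are assumptions.

```python
import itertools
from dataclasses import dataclass, replace
from decimal import Decimal, InvalidOperation
from typing import Optional, Tuple

_next_id = itertools.count(1)  # stable ids, never reused after a row is removed


@dataclass(frozen=True)
class Row:
    id: int
    description: str = ""
    amount: str = ""
    error: Optional[str] = None


Rows = Tuple[Row, ...]


def validate_amount(value: str) -> Optional[str]:
    """Return an error message for non-numeric input, or None if valid."""
    try:
        if Decimal(value).is_finite():
            return None
    except InvalidOperation:
        pass
    return "Amount must be a number"


def add_row(rows: Rows) -> Rows:
    return rows + (Row(id=next(_next_id)),)


def remove_row(rows: Rows, row_id: int) -> Rows:
    # Filter by id rather than list index, so later rows keep their identity.
    return tuple(r for r in rows if r.id != row_id)


def update_amount(rows: Rows, row_id: int, value: str) -> Rows:
    # Return a new tuple; the error is recomputed alongside the value,
    # so the two can never drift out of sync.
    return tuple(
        replace(r, amount=value, error=validate_amount(value)) if r.id == row_id else r
        for r in rows
    )


rows: Rows = ()
rows = add_row(add_row(rows))
rows = update_amount(rows, rows[0].id, "12.5O")  # OCR-style typo
print(rows[0].error)  # Amount must be a number
rows = remove_row(rows, rows[0].id)
print(len(rows))      # 1
```

Because every update returns a fresh sequence and errors are stored on the row they describe, removing a row can never leave an error message pointing at an index that no longer exists.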

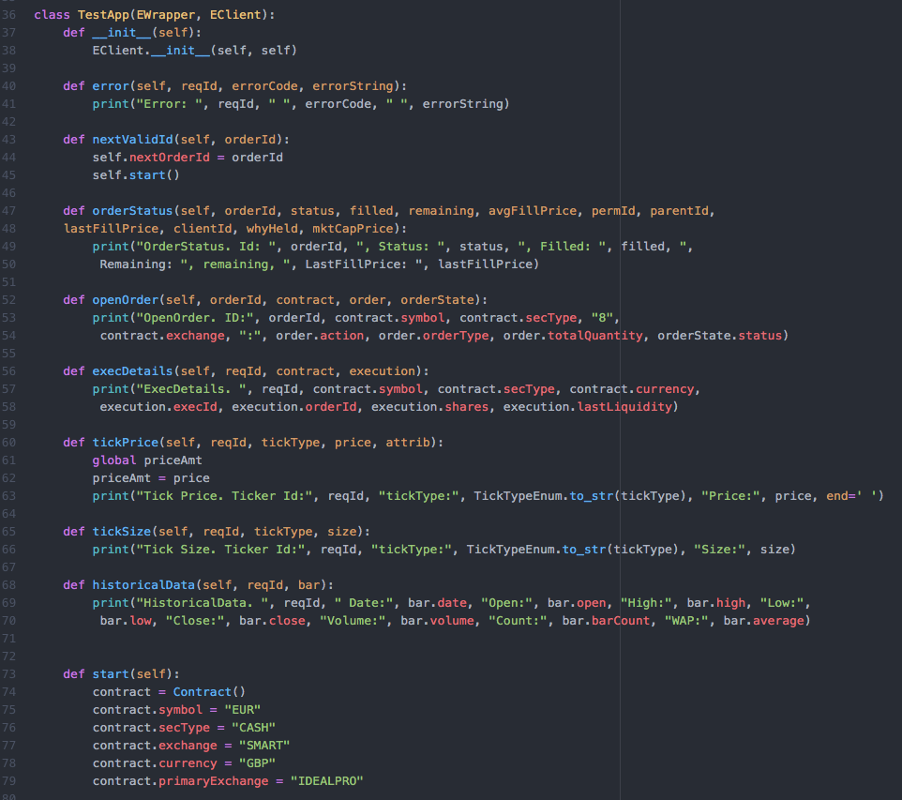

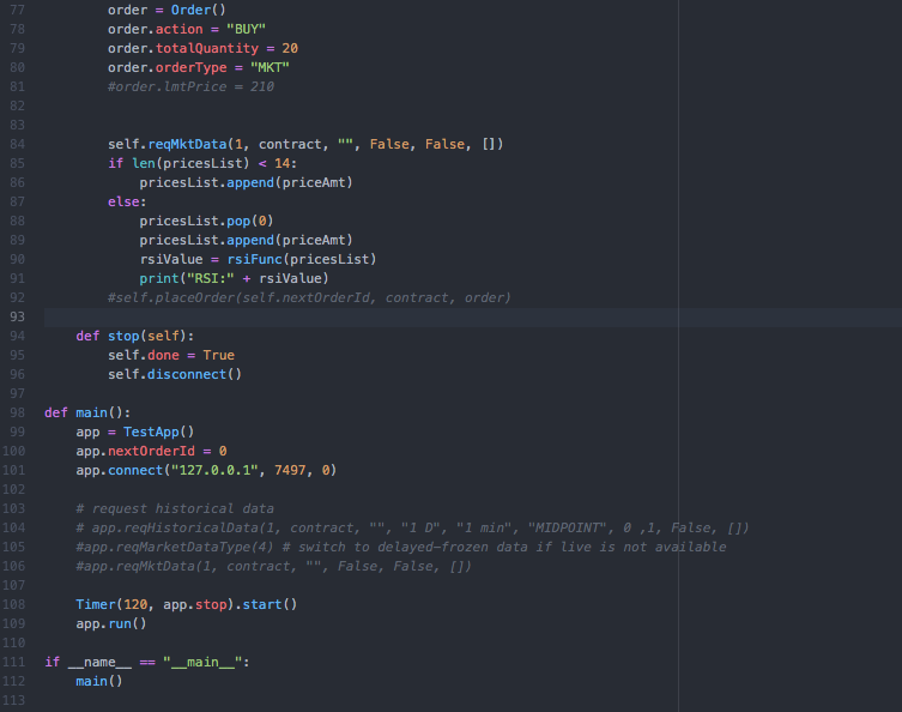

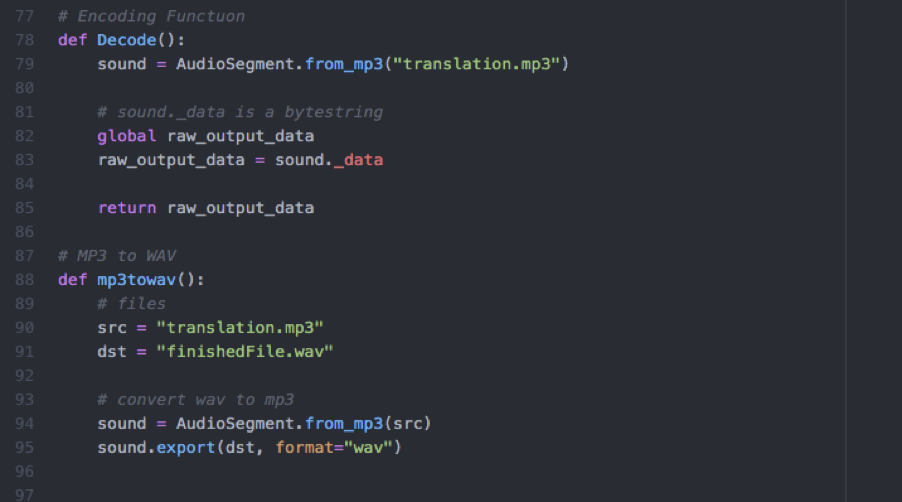

The automated receipt and invoice data extraction system worked by sending users’ images from the Node.js server to a Python Flask server, which carried out the OCR and word categorization. The Flask server returned the results as JSON to the Node.js server, which saved the output text and sent the file paths to the React component. The user could then edit the output and add or remove rows.
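The post does not describe the categorization rules or the JSON schema, so the following is a hypothetical sketch of what the Flask side might do once the OCR engine has returned lines of text: look for a date, take the amount from a total line while skipping subtotals, and serialize the fields as JSON for the Node.js server. The regular expressions, the keywords, and the `image`/`fields` keys are all assumptions. Inside an actual Flask route, the dictionary would more likely be returned with `flask.jsonify` than with `json.dumps`.

```python
import json
import re
from typing import Dict, List, Optional

DATE_PATTERN = re.compile(r"\b\d{1,2}[/.-]\d{1,2}[/.-]\d{2,4}\b")
AMOUNT_PATTERN = re.compile(r"\d{1,3}(?:,\d{3})*\.\d{2}|\d+\.\d{2}")
SUBTOTAL_PATTERN = re.compile(r"\bsub\s*-?\s*total\b")
TOTAL_KEYWORDS = ("total", "amount due", "balance due")


def categorize_lines(lines: List[str]) -> Dict[str, Optional[str]]:
    """Pick out a date and a total from OCR'd receipt lines (assumed rules)."""
    fields: Dict[str, Optional[str]] = {"date": None, "total": None}
    for line in lines:
        lower = line.lower()
        if fields["date"] is None:
            match = DATE_PATTERN.search(line)
            if match:
                fields["date"] = match.group(0)
        is_total_line = any(k in lower for k in TOTAL_KEYWORDS)
        if is_total_line and not SUBTOTAL_PATTERN.search(lower):
            amounts = AMOUNT_PATTERN.findall(line)
            if amounts:
                # Later total lines overwrite earlier ones; the grand total
                # is usually printed last on a receipt.
                fields["total"] = amounts[-1].replace(",", "")
    return fields


def build_response(image_path: str, lines: List[str]) -> str:
    """Serialize the categorized fields as the JSON body sent back to Node.js."""
    return json.dumps({"image": image_path, "fields": categorize_lines(lines)})


receipt = ["CAFE NERO", "12/03/2020 14:02", "Subtotal 8.50", "VAT 1.70", "TOTAL 10.20"]
print(build_response("uploads/receipt-1.jpg", receipt))
```

Keyword rules like these are brittle on their own, which is consistent with the post’s point that the categorization step had to be tuned to the OCR engine’s particular output.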

The Finished Product

After the first version, I decided to make Percy focus solely on the automated receipt & invoice data extraction component. For this reason, I switched from a pure ‘create-react-app’ setup to a static HTML website backed by an Express server, a Python Flask server, and a MongoDB database, with a React component embedded in the static HTML. Web developers might describe it as a ‘MERN’ (MongoDB, Express, React, Node.js) app, with a Flask service alongside.

The homepage.

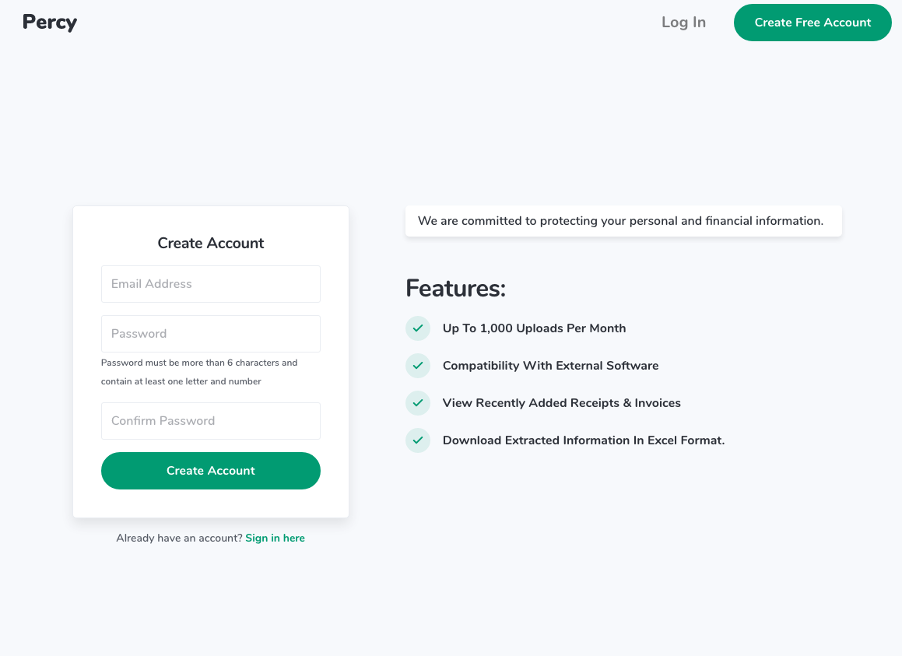

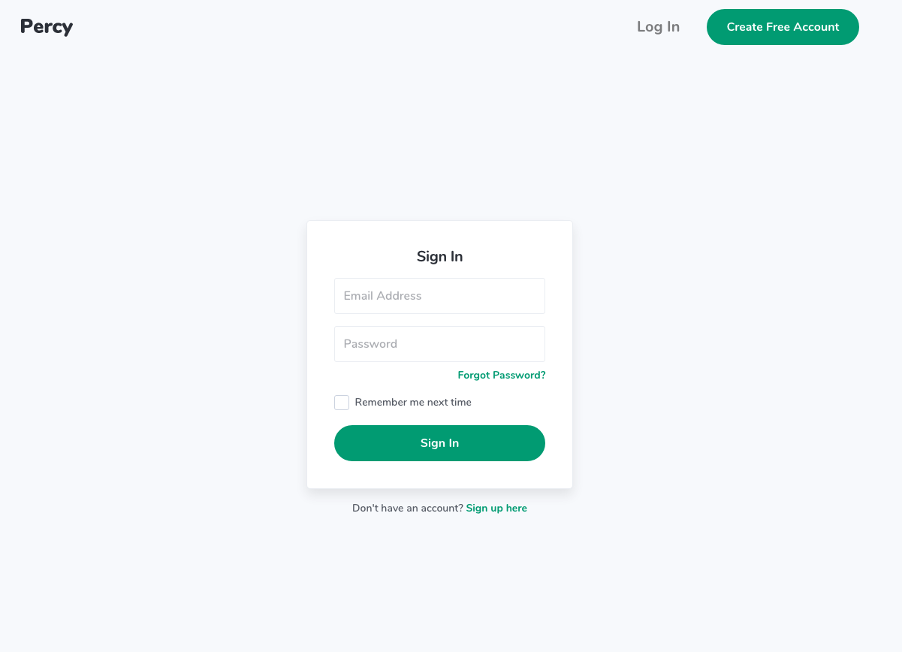

The sign-up page, with working account verification tokens and forgotten password resets.

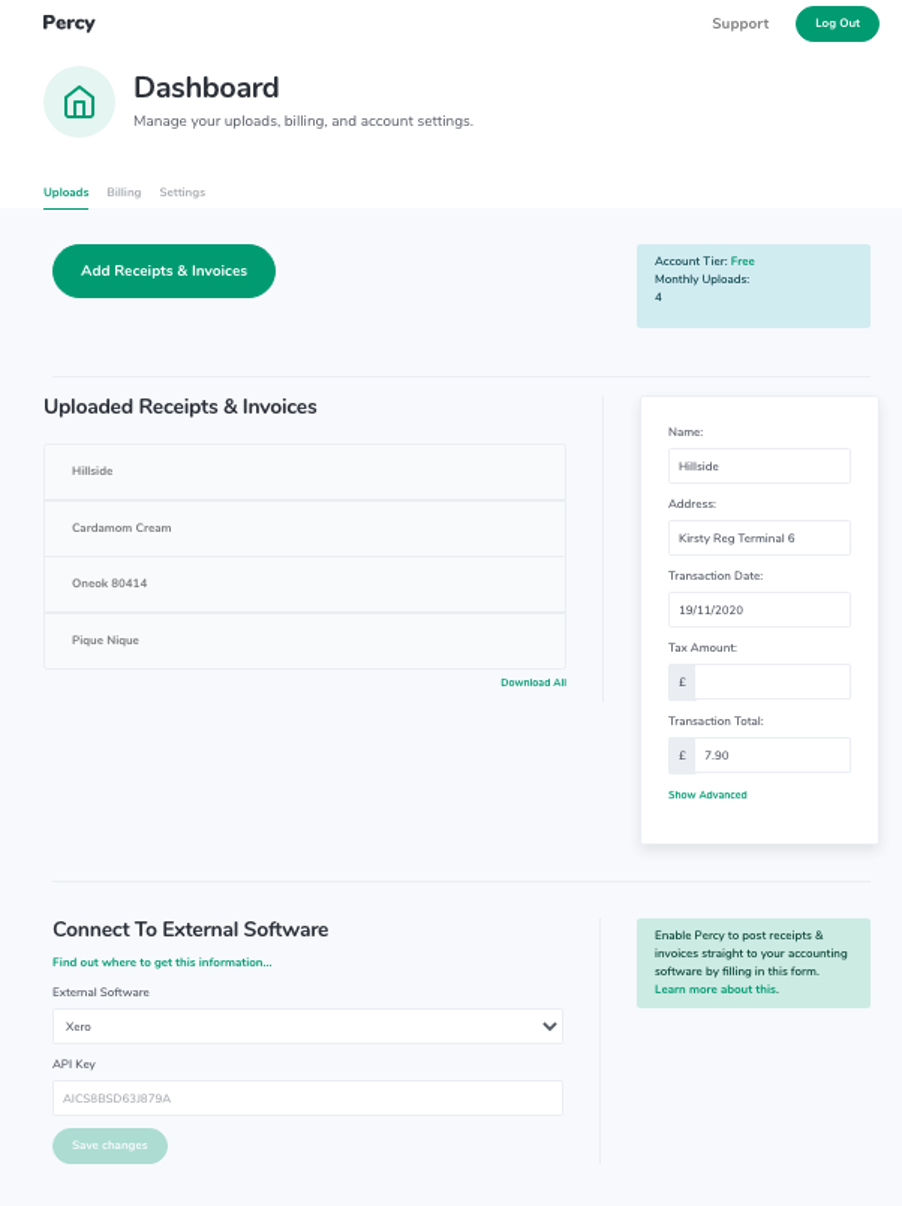
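The sign-up page above mentions working account verification tokens and password resets without describing them. A widely used pattern, sketched here in Python under stated assumptions rather than as Percy’s Express/MongoDB implementation, is to email the user a long random token, store only its SHA-256 hash together with an expiry time, and compare hashes in constant time when the link is clicked. The 24-hour lifetime and the `issue_token`/`verify_token`/`TokenRecord` names are hypothetical.

```python
import hashlib
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional, Tuple

TOKEN_TTL = timedelta(hours=24)  # assumed lifetime for verification/reset links


@dataclass(frozen=True)
class TokenRecord:
    token_hash: str       # only the hash is stored, never the raw token
    expires_at: datetime


def _hash(raw_token: str) -> str:
    return hashlib.sha256(raw_token.encode("utf-8")).hexdigest()


def issue_token(now: Optional[datetime] = None) -> Tuple[str, TokenRecord]:
    """Return (raw token to email to the user, record to store in the database)."""
    now = now or datetime.now(timezone.utc)
    raw = secrets.token_urlsafe(32)  # 32 random bytes as URL-safe base64 text
    return raw, TokenRecord(token_hash=_hash(raw), expires_at=now + TOKEN_TTL)


def verify_token(raw_token: str, record: TokenRecord, now: Optional[datetime] = None) -> bool:
    now = now or datetime.now(timezone.utc)
    if now > record.expires_at:
        return False
    # Constant-time comparison avoids leaking how many characters matched.
    return secrets.compare_digest(_hash(raw_token), record.token_hash)


raw, record = issue_token()
print(verify_token(raw, record))  # True
print(verify_token(raw, record, now=record.expires_at + timedelta(seconds=1)))  # False
```

Storing only the hash means that a leaked database does not hand an attacker usable verification or reset links.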

The dashboard page, where you can view previously uploaded receipts/invoices or update your billing/account settings. You can also download previously uploaded receipts as a CSV file that opens in Microsoft Excel, or connect your accounting software package so that receipts/invoices are sent to it automatically along with the extracted information.

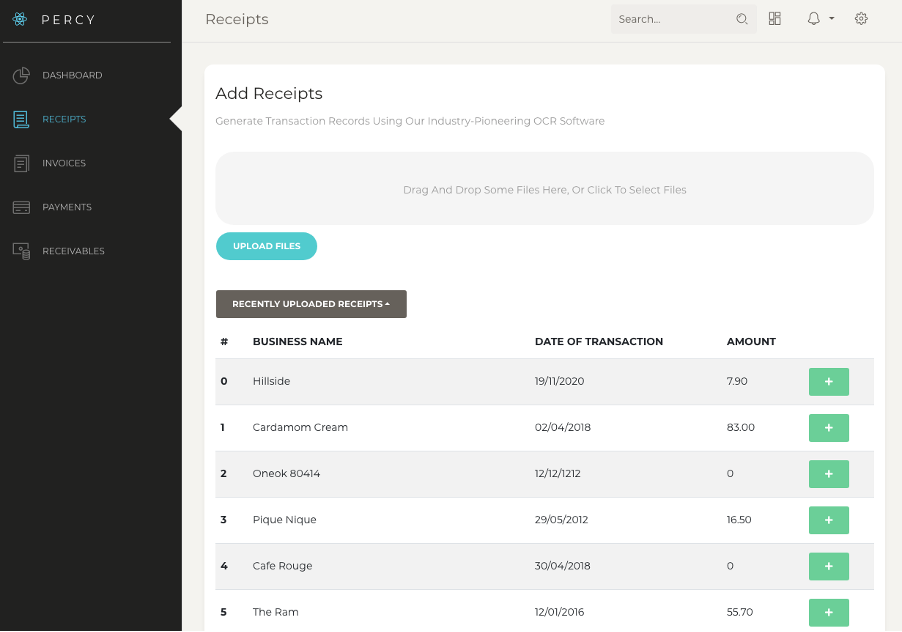

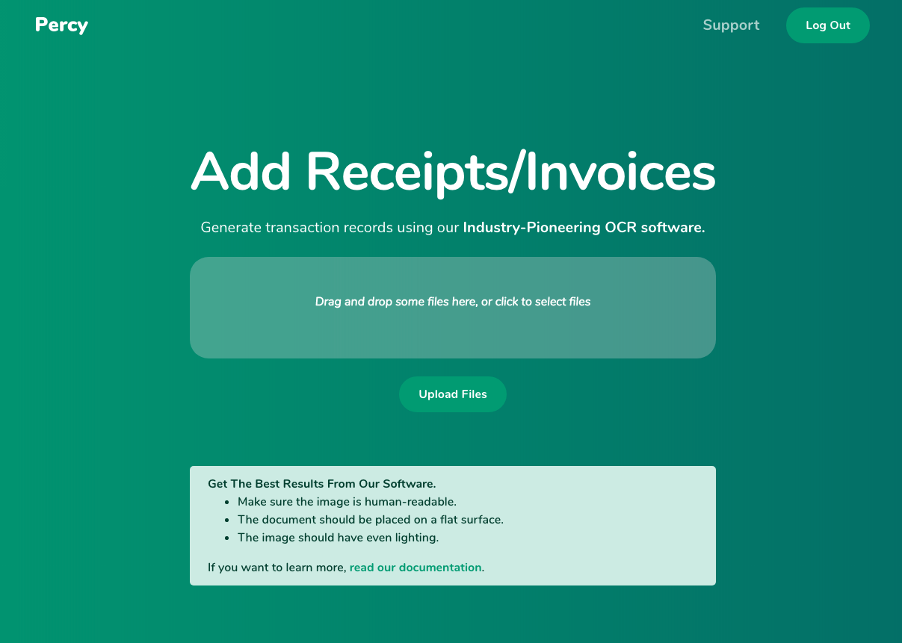

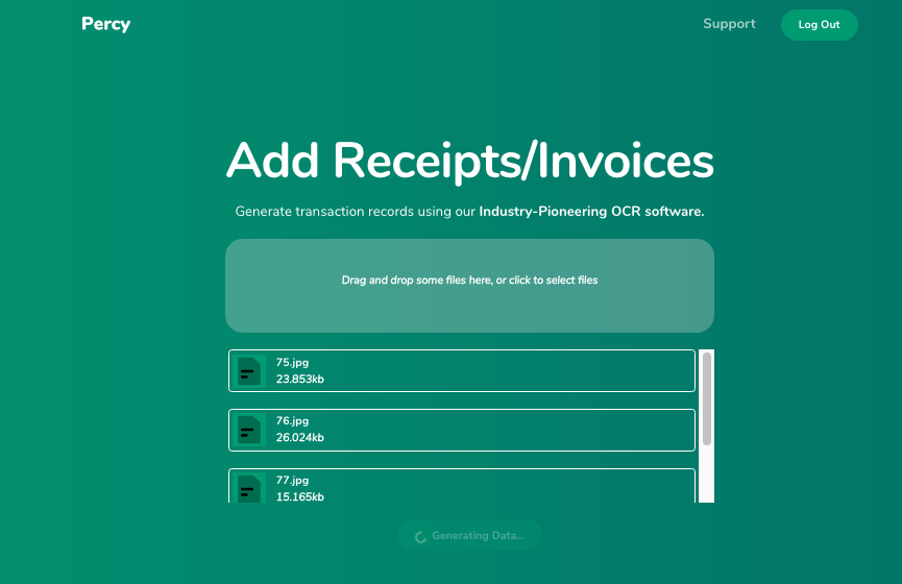
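A CSV download like the dashboard’s could be produced with Python’s standard `csv` module, as in the hypothetical sketch below. The column names are assumptions, since the post does not list Percy’s export fields. Encoding the file as `utf-8-sig` prepends a byte-order mark, which helps Excel recognise the file as UTF-8 and display symbols such as `£` correctly.

```python
import csv
import io
from typing import Dict, Iterable

# Hypothetical export columns; Percy's real fields are not listed in the post.
FIELDNAMES = ["date", "supplier", "description", "amount"]


def receipts_to_csv(receipts: Iterable[Dict[str, str]]) -> bytes:
    buffer = io.StringIO()
    # Missing keys are written as empty cells; unexpected keys are ignored.
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(receipts)
    # The BOM added by "utf-8-sig" lets Excel detect the encoding.
    return buffer.getvalue().encode("utf-8-sig")


data = receipts_to_csv([
    {"date": "12/03/2020", "supplier": "Cafe, Ltd", "amount": "10.20"},
])
print(data.decode("utf-8-sig"))
```

The `csv` module takes care of quoting, so a supplier name containing a comma stays in a single cell.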

The upload page.

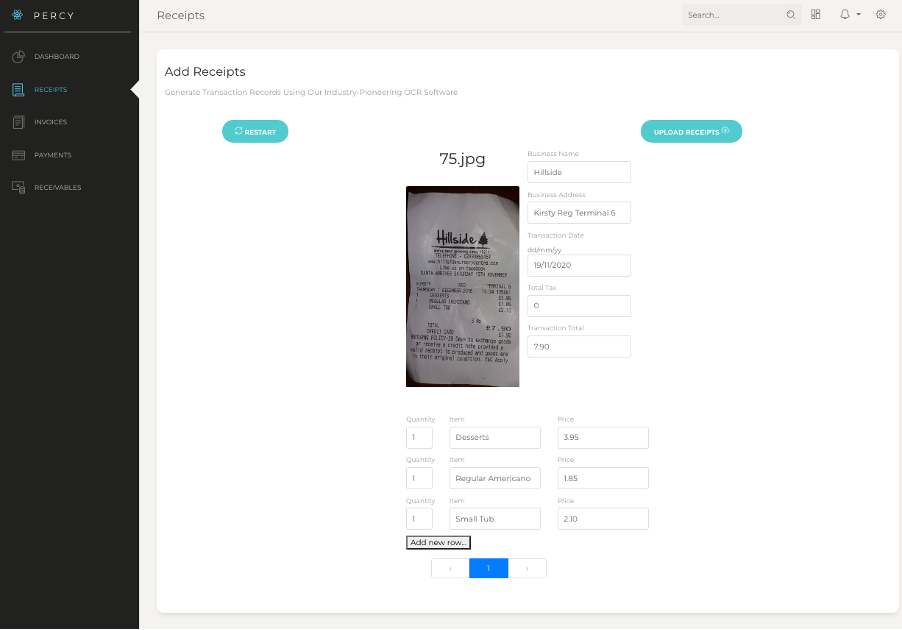

The extracted information page, where you can view and edit the extracted information, and upload it to your Percy account and/or connected external software.

The support page.

That concludes this blog post about Percy. I hope you have enjoyed learning about my web app. Don’t forget you can see it in action at percy.app.

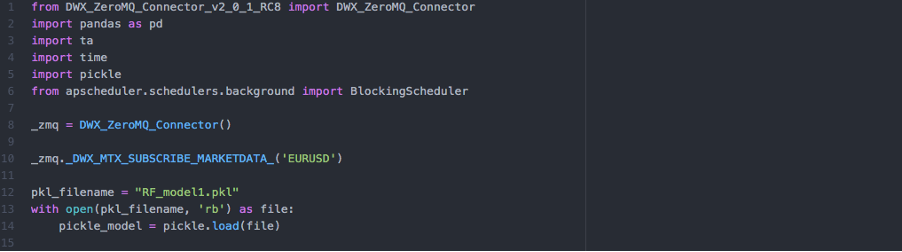

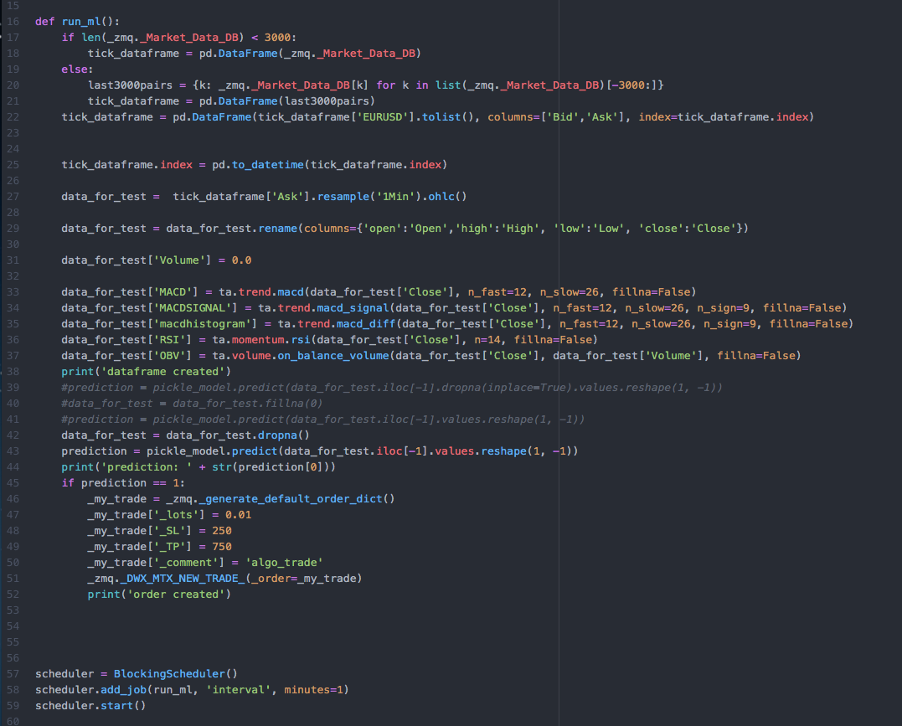

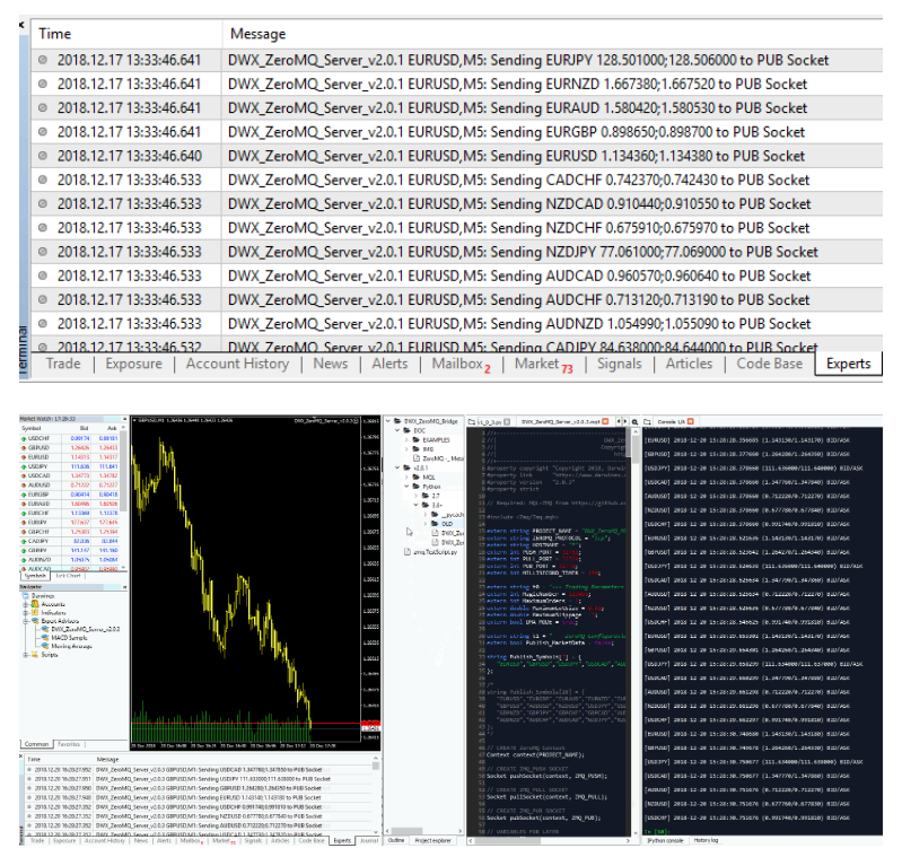

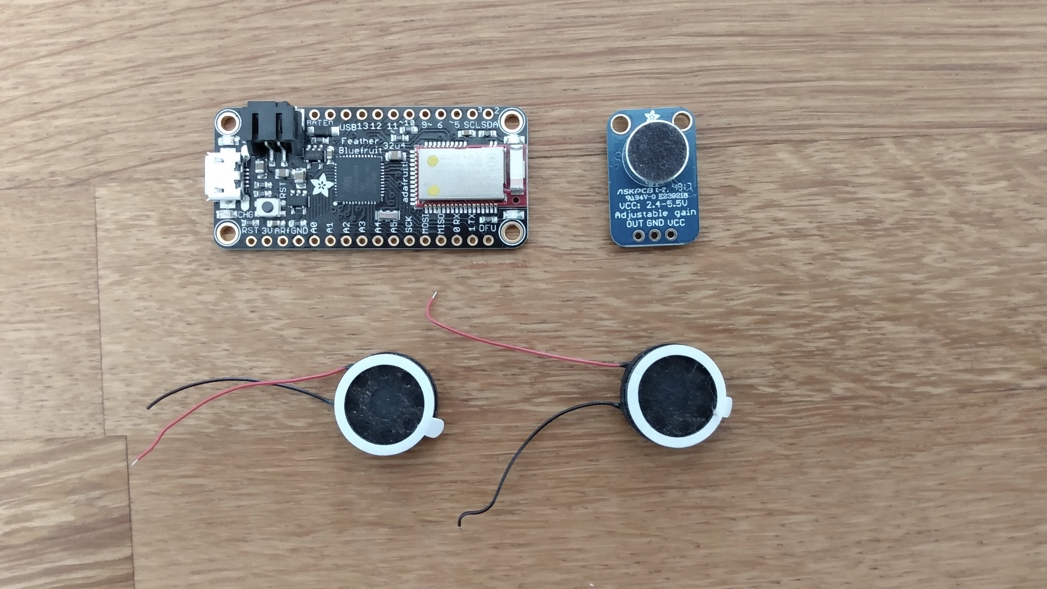

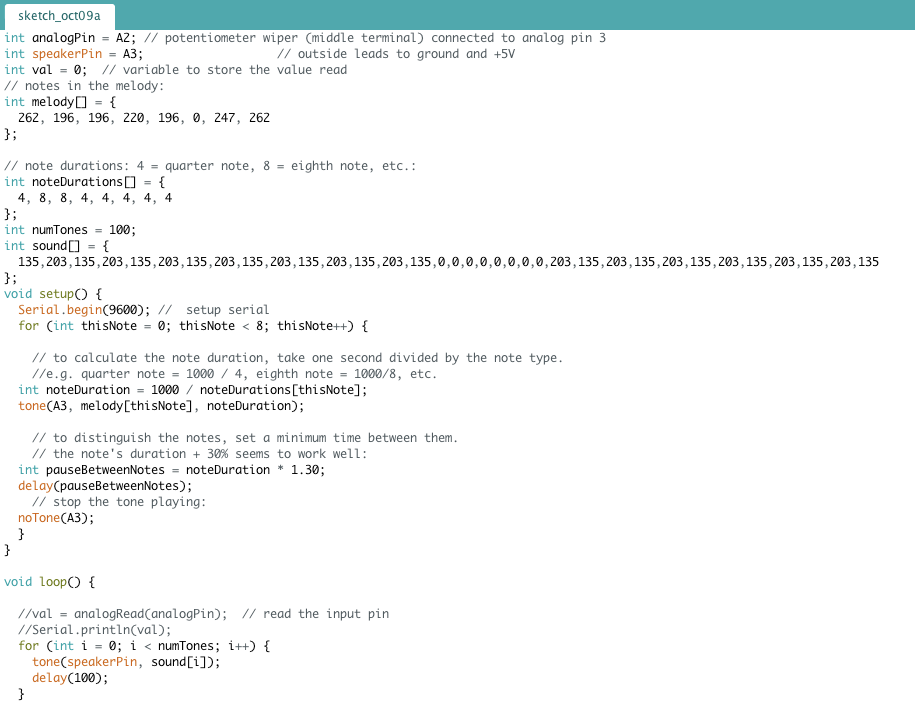

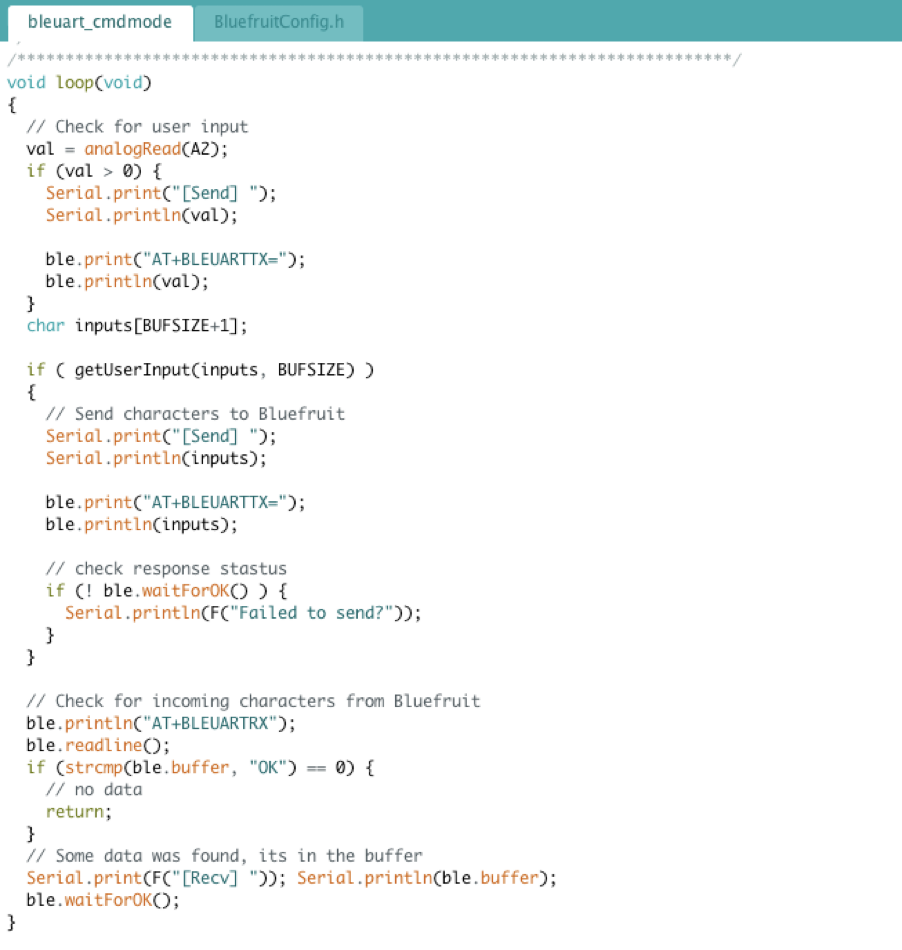

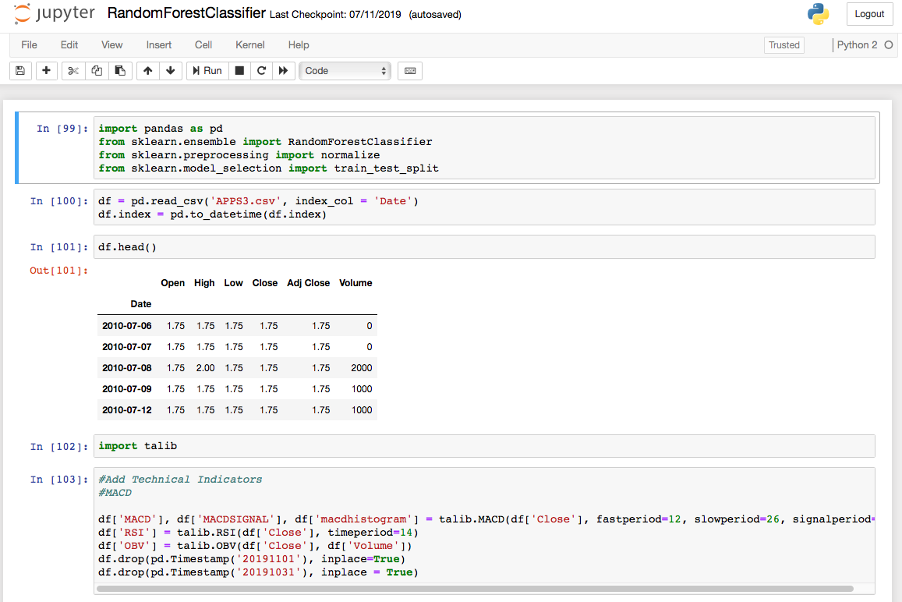

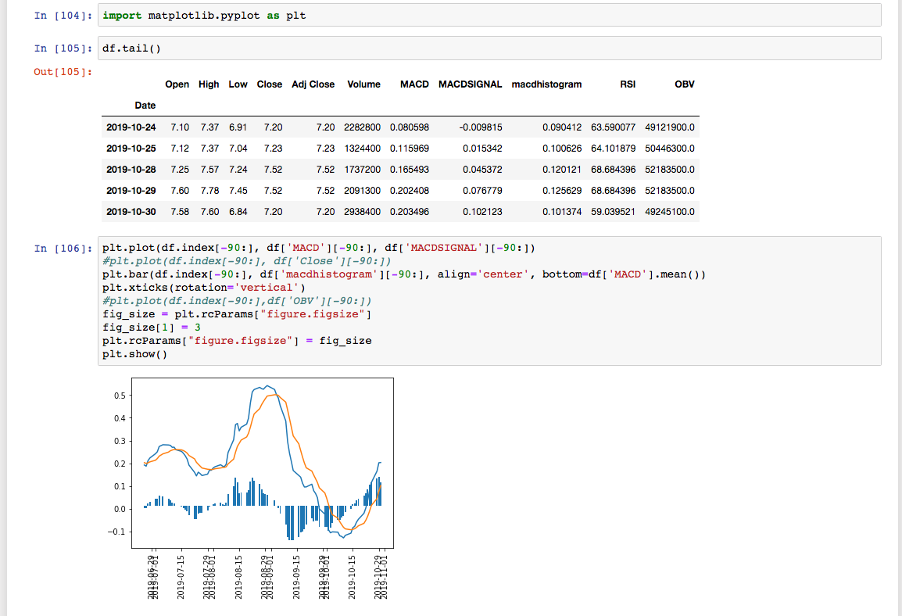

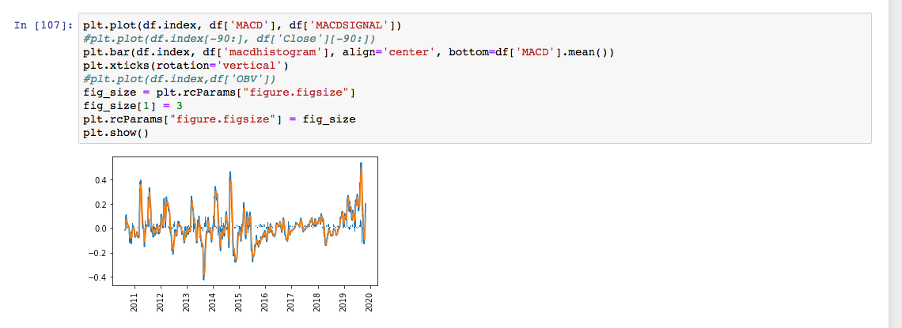

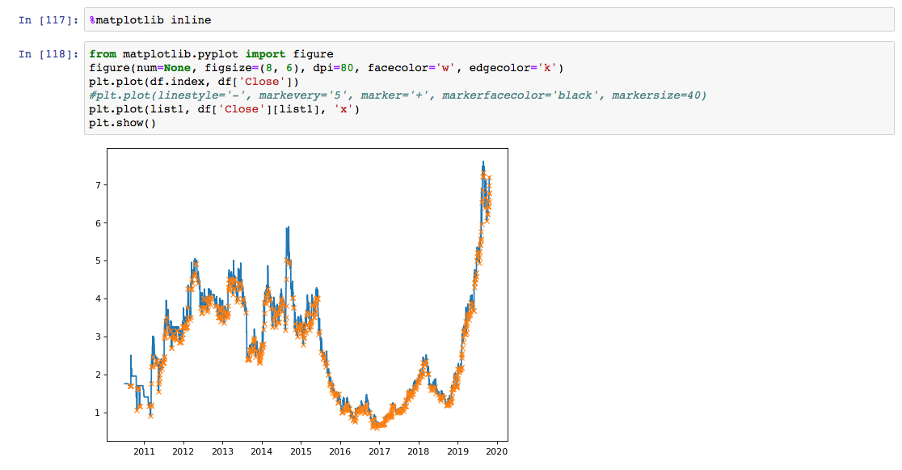

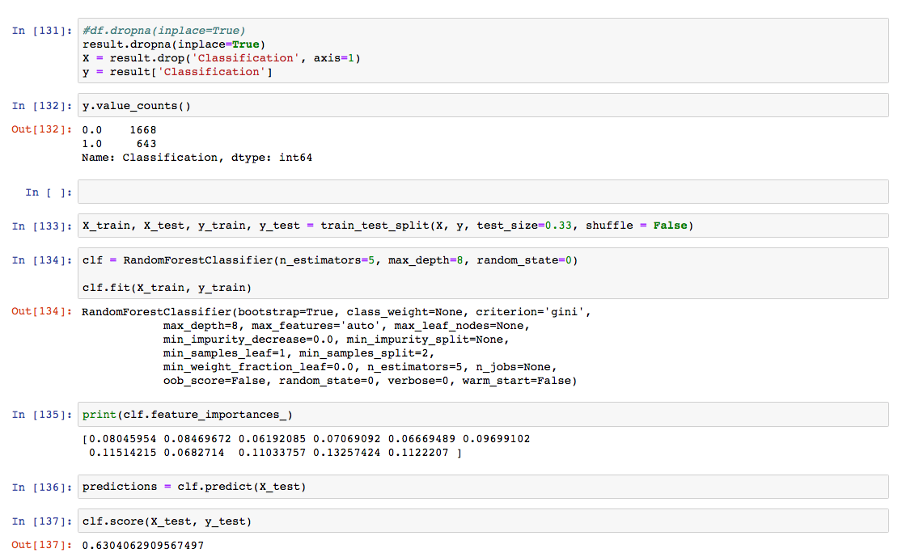

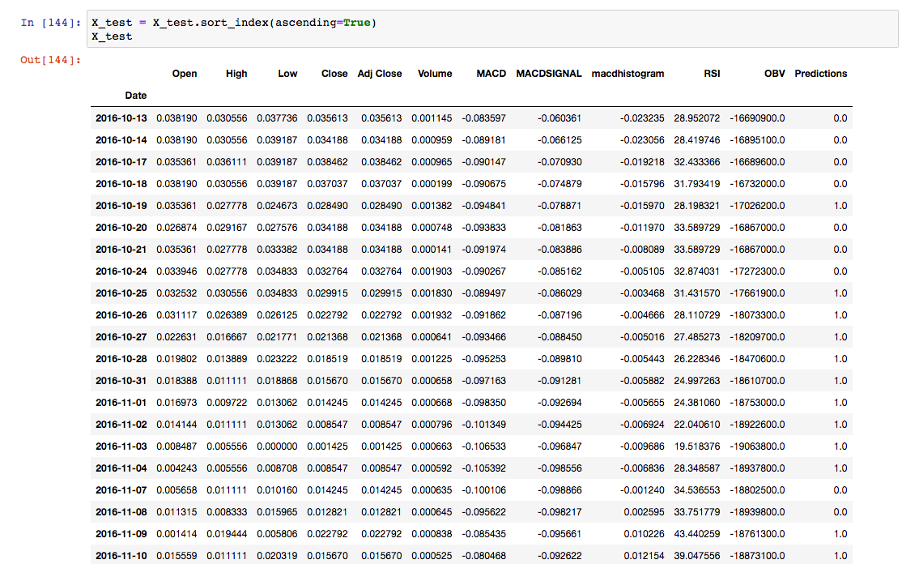

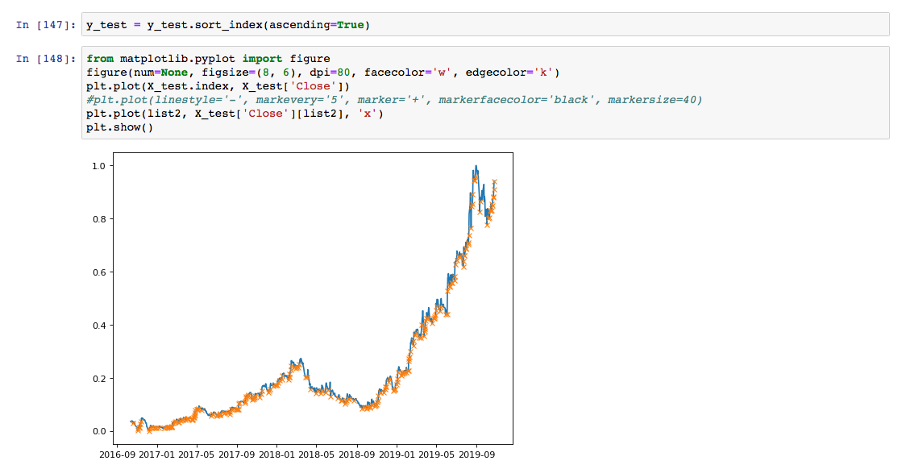

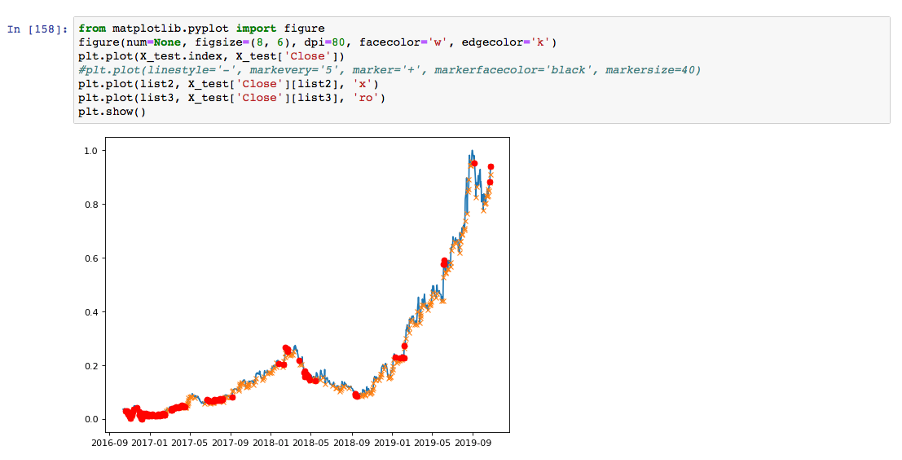

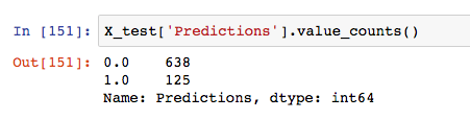

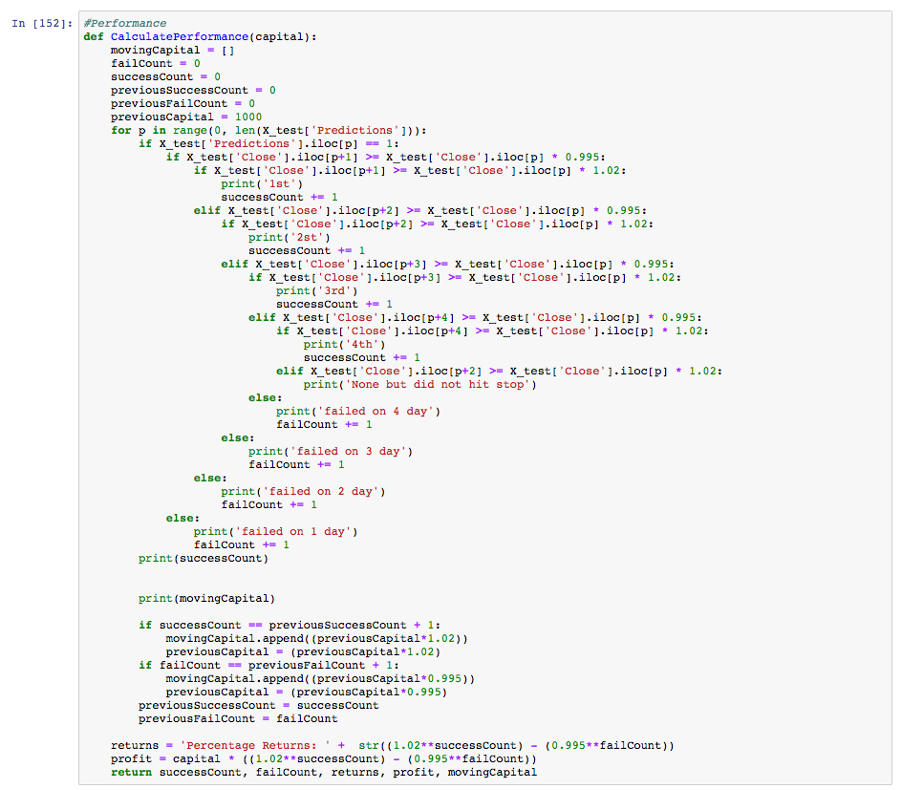

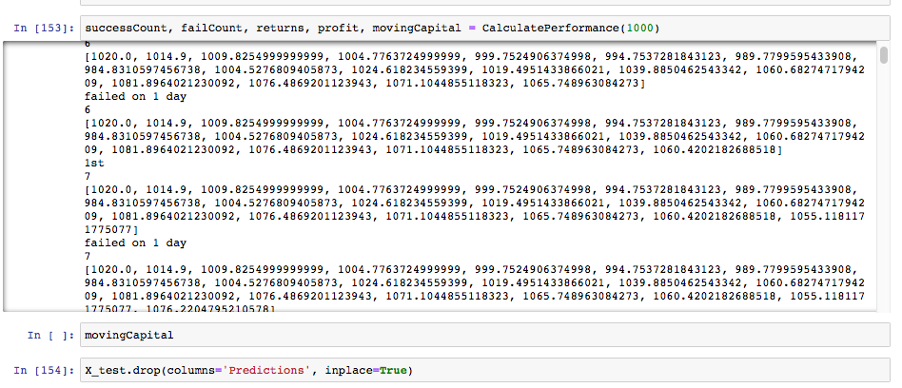

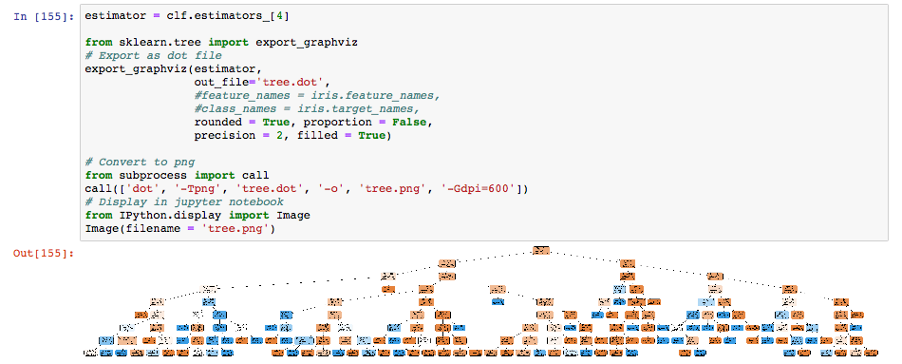

My model had a 63.04% accuracy for predicting buying opportunities in unseen future stock prices. With a 2% gain for each correct prediction and a 0.5% loss for each incorrect one, I achieved a 1,500% return within a 30-day period. However, I hadn’t accounted for the 0.50% buying/selling fee charged by brokers for small, fast-growing stocks, which rendered the system unprofitable. Despite this, I continued building the infrastructure for live trading using this model, as I thought it was an interesting project.
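The figures in the paragraph above allow a rough per-trade expectation to be worked out. The sketch below is a simplification that ignores compounding, position sizing, and slippage, and it assumes (the post does not say) that the 0.50% fee is charged on both the buy and the sell, i.e. about 1 percentage point per round trip. Under those assumptions, the expected gain of roughly 1.08% per trade shrinks to under 0.1%, and the strategy loses money as soon as live accuracy falls below 60%. The function names are hypothetical.

```python
def expected_return_per_trade(accuracy: float, gain: float, loss: float,
                              round_trip_fee: float = 0.0) -> float:
    """Expected fractional return of one trade (no compounding, no slippage)."""
    return accuracy * gain - (1 - accuracy) * loss - round_trip_fee


def break_even_accuracy(gain: float, loss: float, round_trip_fee: float = 0.0) -> float:
    """Accuracy at which the expected return per trade is exactly zero."""
    return (loss + round_trip_fee) / (gain + loss)


ACCURACY, GAIN, LOSS = 0.6304, 0.02, 0.005
FEE = 2 * 0.005  # assumption: 0.50% charged on the buy and again on the sell

print(f"{expected_return_per_trade(ACCURACY, GAIN, LOSS):.4%}")       # 1.0760%
print(f"{expected_return_per_trade(ACCURACY, GAIN, LOSS, FEE):.4%}")  # 0.0760%
print(f"{break_even_accuracy(GAIN, LOSS, FEE):.0%}")                  # 60%
```

Without fees, the break-even accuracy for a 2% gain against a 0.5% loss is only 20%, which is why the fees matter so much more than the model’s accuracy suggests.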
